Classification And Regression Trees Free Software

A recursive partitioning tree showing survival of passengers on the Titanic ('sibsp' is the number of spouses or siblings aboard). The figures under the leaves show the probability of survival and the percentage of observations in the leaf. Summarizing: your chances of survival were good if you were (i) a female or (ii) a young boy without several family members.

Recursive partitioning methods have been developed since the 1980s. Well-known methods of recursive partitioning include Ross Quinlan's ID3 algorithm and its successors, C4.5 and C5.0, and Classification and Regression Trees (CART).

Advantages and disadvantages

Compared to other multivariable methods, recursive partitioning has advantages and disadvantages.

The advantages are that recursive partitioning generates clinically more intuitive models that do not require the user to perform calculations, allows misclassifications to be prioritized differently in order to create a decision rule with more sensitivity or more specificity, and may be more accurate.

The disadvantages are that it does not work well for continuous variables and may overfit the data.

Examples

Examples are available of using recursive partitioning in research on diagnostic tests. Goldman used recursive partitioning to prioritize sensitivity in the diagnosis of myocardial infarction among patients with chest pain in the emergency room.
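The idea of prioritizing one kind of misclassification over another can be sketched with a single split. The following is a minimal illustration, not the method used in any of the studies mentioned here: the function names, the cost parameters `fn_cost` and `fp_cost`, and the toy data are all assumptions made for the example. It searches for the threshold on one variable that minimizes a weighted count of false negatives and false positives.

```python
def best_threshold(values, labels, fn_cost=1.0, fp_cost=1.0):
    """Return (threshold, cost) for the rule 'predict positive if value >= threshold'.

    fn_cost and fp_cost are hypothetical penalties for a missed positive
    and a false alarm; raising fn_cost pushes the rule toward sensitivity.
    """
    # Candidates: every observed value, plus one above the maximum
    # (which corresponds to predicting nobody positive).
    candidates = sorted(set(values)) + [max(values) + 1.0]
    best = None
    for t in candidates:
        false_neg = sum(1 for v, y in zip(values, labels) if y == 1 and v < t)
        false_pos = sum(1 for v, y in zip(values, labels) if y == 0 and v >= t)
        cost = fn_cost * false_neg + fp_cost * false_pos
        if best is None or cost < best[1]:
            best = (t, cost)
    return best


def sensitivity(values, labels, threshold):
    """Fraction of true positives that the rule flags as positive."""
    positives = [v for v, y in zip(values, labels) if y == 1]
    return sum(1 for v in positives if v >= threshold) / len(positives)


# Toy data (invented for illustration): a risk score and a 0/1 outcome.
scores = [1, 2, 3, 4, 5, 6, 7, 8]
disease = [0, 0, 1, 0, 0, 1, 1, 1]

balanced_t, _ = best_threshold(scores, disease)
sensitive_t, _ = best_threshold(scores, disease, fn_cost=5.0)

print(balanced_t, sensitivity(scores, disease, balanced_t))
print(sensitive_t, sensitivity(scores, disease, sensitive_t))
```

On this toy data, equal costs select a threshold of 6 and miss one of the four positive cases. Making a false negative five times as costly moves the threshold down to 3, which catches every positive case at the price of two false alarms.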

Decision Tree Software Mac

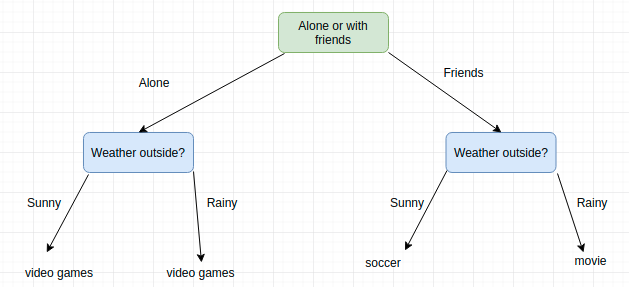

Decision tree learning is one of the predictive modeling approaches used in statistics, data mining, and machine learning. It uses a decision tree (as a predictive model) to go from observations about an item (represented in the branches) to conclusions about the item's target value (represented in the leaves). Tree models where the target variable can take a discrete set of values are called classification trees; in these tree structures, leaves represent class labels and branches represent conjunctions of features that lead to those class labels. Decision trees where the target variable can take continuous values (typically real numbers) are called regression trees.

In decision analysis, a decision tree can be used to visually and explicitly represent decisions. In data mining, a decision tree describes data (but the resulting classification tree can be an input for decision making). This page deals with decision trees in data mining.

An example tree which estimates the probability of kyphosis after surgery, given the age of the patient and the vertebra at which surgery was started. The same tree is shown in three different ways.

Left: the colored leaves show the probability of kyphosis after surgery and the percentage of patients in the leaf. Middle: the tree as a perspective plot. Right: an aerial view of the middle plot; the probability of kyphosis after surgery is higher in the darker areas. (Note: the treatment of kyphosis has advanced considerably since this rather small set of data was collected.)

Decision tree types

Decision trees used in data mining are of two main types:

Classification tree analysis is when the predicted outcome is the class (discrete) to which the data belongs. Regression tree analysis is when the predicted outcome can be considered a real number (e.g. the price of a house, or a patient's length of stay in a hospital).

The term Classification And Regression Tree (CART) analysis is an umbrella term used to refer to both of the above procedures, first introduced by Breiman et al. Trees used for regression and trees used for classification have some similarities, but also some differences, such as the procedure used to determine where to split.

Some techniques, often called ensemble methods, construct more than one decision tree. Boosted trees incrementally build an ensemble by training each new instance to emphasize the training instances previously mis-modeled. A typical example is AdaBoost.
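The boosting idea, where each new model emphasizes the training instances that earlier models got wrong, can be sketched in a few lines. This is a simplified AdaBoost-style illustration under stated assumptions: the weak learner is a one-split tree (a "stump") on a single variable, labels are +1 and -1, and the function names and toy data are invented for the example rather than taken from any library.

```python
import math


def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, sign) for the best one-split rule.

    The rule predicts `sign` when x >= threshold and `-sign` otherwise.
    """
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            preds = [sign if x >= t else -sign for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best


def adaboost(xs, ys, rounds=3):
    """Fit `rounds` stumps, reweighting the data after each one."""
    n = len(xs)
    weights = [1.0 / n] * n  # start with every instance equally important
    ensemble = []
    for _ in range(rounds):
        err, t, sign = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # vote strength of this stump
        ensemble.append((alpha, t, sign))
        # Misclassified instances (y * prediction == -1) gain weight,
        # correctly classified ones lose weight.
        weights = [
            w * math.exp(-alpha * y * (sign if x >= t else -sign))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps in the ensemble."""
    score = sum(a * (s if x >= t else -s) for a, t, s in ensemble)
    return 1 if score >= 0 else -1


# Toy data (invented): no single threshold separates the two classes.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

On this toy data the best single stump misclassifies three of the ten points, while the weighted vote of three stumps classifies all of them correctly, because each round concentrates weight on the points the previous stumps missed.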

Further reading

James, Gareth; Witten, Daniela; Hastie, Trevor; Tibshirani, Robert (2017). An Introduction to Statistical Learning: with Applications in R. New York: Springer. pp. 303–336.